The maximal model of spatial hearing, also called Jeffress’ place theory, proposes that individual neurons are ‘labeled’ by the spatial cues that evoke maximum responses. Maximal activities evoked across many such neurons, each having a slightly different label, consequently amount to a topographic space map or neural image.
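The labeled-line idea can be illustrated with a short Python sketch. It is a minimal sketch under stated assumptions, not Jeffress' own formulation. The population of 21 labels spaced 0.05 cycles apart, the circular (von Mises-shaped) tuning function `tuning`, and its sharpness `kappa` are all hypothetical choices. Reading the map by reporting the label of the most active neuron (`read_label`) is also an assumption, because the model itself does not specify a read-out.

```python
import numpy as np

# Hypothetical population: 21 model neurons, each 'labeled' by a best IPD (cycles),
# spaced 0.05 cycles apart between -0.5 and +0.5.
BEST_IPDS = np.linspace(-0.5, 0.5, 21)


def tuning(ipd, best_ipds=BEST_IPDS, kappa=4.0):
    """Assumed circular (von Mises-shaped) IPD tuning; equals 1.0 when ipd == best."""
    return np.exp(kappa * (np.cos(2 * np.pi * (ipd - best_ipds)) - 1.0))


def read_label(activity, best_ipds=BEST_IPDS):
    """Assumed maximal read-out: report the label of the most active neuron."""
    return float(best_ipds[np.argmax(activity)])


# Each input IPD drives its peak response in the neuron labeled with that IPD.
for ipd in (-0.2, -0.1, 0.0, 0.1, 0.2):
    print(ipd, round(read_label(tuning(ipd)), 3))
```

Under these assumptions the label of the peak recovers the input cue, which is the sense in which the activity pattern forms a "space map".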

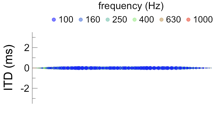

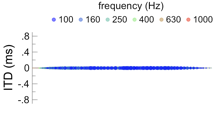

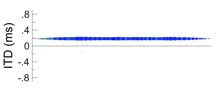

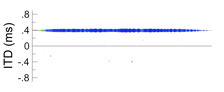

As with numerous other acoustic and neural models, the maximal model performs reasonably well when representing interaural time differences (ITD) under ideal listening conditions or when considering binaural signals exemplary of ideal listening conditions. In the examples below, for instance, five single or recurrent interaural phase differences (IPD) are considered: -0.2, -0.1, 0.0, 0.1, or 0.2 cycles, where IPD (in cycles) is estimated as ITD multiplied by frequency.
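The conversion from ITD to IPD can be written out explicitly. The sketch below assumes a 500 Hz tone purely for illustration; the function name `ipd_cycles` is hypothetical. Because phase is circular, the result is wrapped into the range [-0.5, 0.5) cycles.

```python
def ipd_cycles(itd_s: float, freq_hz: float) -> float:
    """IPD in cycles = ITD (seconds) x frequency (Hz), wrapped into [-0.5, 0.5)."""
    ipd = itd_s * freq_hz
    # Phase is circular: IPDs a whole cycle apart cannot be told apart.
    return (ipd + 0.5) % 1.0 - 0.5


# Hypothetical 500 Hz tone: these ITDs give the five IPDs used in the examples.
for itd_us in (-400, -200, 0, 200, 400):
    print(f"ITD {itd_us:+d} us -> IPD {ipd_cycles(itd_us * 1e-6, 500.0):+.2f} cycles")

# Wrapping: a 1.2 ms ITD at 500 Hz is 0.6 cycles, which wraps to -0.4 cycles.
print(f"{ipd_cycles(1.2e-3, 500.0):+.2f}")
```

The same ITD therefore yields a larger IPD at a higher frequency, and sufficiently large ITDs alias onto IPDs of the opposite sign.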

Under the maximal model, ipsilateral directions are unencoded by modeled neurons within each hemisphere, a finding that favors the inter-hemispheric channel model over the maximal model. On the other hand, equivalent activities evoked along the medial and lateral slopes of spatially selective neurons do not produce ambiguous representations, allowing peripheral ITDs to be encoded at slightly higher frequencies than under the inter-hemispheric channel and edge models.
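Both points can be illustrated with a single hypothetical hemisphere. The sketch assumes a sign convention (positive IPD means the sound is toward the right, i.e. contralateral to the left hemisphere), a label range of 0 to +0.25 cycles, and the same illustrative von Mises-shaped tuning as before. None of these values come from the model itself.

```python
import numpy as np

# Assumed sign convention: positive IPD = sound toward the right, which is
# contralateral to the left hemisphere. Hypothetical left-hemisphere labels
# therefore span only the contralateral range, 0 to +0.25 cycles.
LEFT_BEST = np.linspace(0.0, 0.25, 11)


def tuning(ipd, best_ipds, kappa=4.0):
    """Assumed circular (von Mises-shaped) IPD tuning, peak value 1.0."""
    return np.exp(kappa * (np.cos(2 * np.pi * (ipd - best_ipds)) - 1.0))


def read_label(ipd, best_ipds):
    """Maximal read-out: the label of the most active neuron."""
    return float(best_ipds[np.argmax(tuning(ipd, best_ipds))])


# Contralateral IPDs are mapped onto distinct labels...
contra = [read_label(x, LEFT_BEST) for x in (0.1, 0.2)]
# ...whereas every ipsilateral IPD collapses onto the most medial label (0.0),
# so this hemisphere does not distinguish ipsilateral directions.
ipsi = [read_label(x, LEFT_BEST) for x in (-0.1, -0.2)]
print(contra, ipsi)

# A single neuron's rate is ambiguous: equal on its medial and lateral slopes...
one = np.array([0.1])
print(tuning(0.05, one), tuning(0.15, one))
# ...but the location of the population peak separates the same two IPDs.
print(read_label(0.05, LEFT_BEST), read_label(0.15, LEFT_BEST))
```

The first pair of results shows the ipsilateral gap. The second pair shows why slope ambiguity does not arise when the peak, rather than a single neuron's rate, carries the label.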

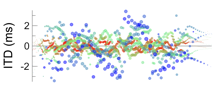

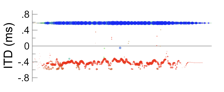

Like the cross-correlation and inter-hemispheric models, the maximal model becomes less tenable under typical listening conditions, or when considering binaural signals exemplary of typical listening conditions. The first issue is that the maximal model omits a mechanism or strategy for interpreting the activity patterns evoked across labeled neurons: a mechanism or strategy for ‘reading the space map’, as it were. The second issue is that a verbatim interpretation of activity patterns is inconsistent with psychoacoustic studies. When spatial cues fluctuate and are broadly distributed over time, for example, maximal activities remain congruous with frontal directions. Yet studies demonstrate that auditory images typically split into two distinct images (or two distinct ‘edges’) when distributions of spatial cues are broad enough. In the example below, for instance, a hypothetical distribution of five IPDs is considered (0.0, -0.1, 0.1, -0.2, and 0.2 cycles), resulting in a broad increase in activity across the neurons.
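The effect of a fluctuating cue can be sketched by averaging the population response over time. The sketch assumes the same illustrative labels and von Mises-shaped tuning as above, and it treats each of the five IPDs as occupying an equal share of time. "Width" is measured here, as an arbitrary choice, by the number of neurons above half of the pattern's peak.

```python
import numpy as np

BEST_IPDS = np.linspace(-0.5, 0.5, 21)  # hypothetical labels (cycles)


def tuning(ipd, kappa=4.0):
    """Assumed circular (von Mises-shaped) IPD tuning, peak value 1.0."""
    return np.exp(kappa * (np.cos(2 * np.pi * (ipd - BEST_IPDS)) - 1.0))


def time_averaged(ipds):
    """Mean population response when the IPD takes each listed value in turn."""
    return np.mean([tuning(x) for x in ipds], axis=0)


fluctuating = [0.0, -0.1, 0.1, -0.2, 0.2]  # distribution from the text
steady = [0.0] * len(fluctuating)          # constant midline IPD for comparison

broad = time_averaged(fluctuating)
narrow = time_averaged(steady)

# The peak stays at the midline (frontal) label in both cases...
print(BEST_IPDS[np.argmax(broad)], BEST_IPDS[np.argmax(narrow)])
# ...but the fluctuating pattern is broader: more neurons exceed half their peak.
print((broad > broad.max() / 2).sum(), (narrow > narrow.max() / 2).sum())
```

Under these assumptions a verbatim maximal read-out still reports a single frontal location, even though the activity pattern itself has broadened.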

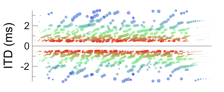

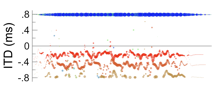

If a broad pattern of activity prompts listeners to report a split auditory image (or an image with split edges), what should listeners report when this pattern is inverted, so that maximal activities are evoked by neurons encoding peripheral directions? In other words, what should listeners hear when a convex activity pattern is exchanged for a concave pattern? In the example below, for instance, IPDs of -0.5 and 0.5 cycles are considered, as would arise if IPD were 0.0 cycles but polarity were inverted in one ear.
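Inverting polarity in one ear shifts the phase of every tonal component by half a cycle, so an IPD of 0.0 cycles becomes ±0.5 cycles. The sketch below reuses the same hypothetical labels and von Mises-shaped tuning (both assumptions) to show the resulting concave pattern. The helper names `wrap` and `invert_one_ear` are hypothetical.

```python
import numpy as np

BEST_IPDS = np.linspace(-0.5, 0.5, 21)  # hypothetical labels (cycles)


def tuning(ipd, kappa=4.0):
    """Assumed circular (von Mises-shaped) IPD tuning, peak value 1.0."""
    return np.exp(kappa * (np.cos(2 * np.pi * (ipd - BEST_IPDS)) - 1.0))


def wrap(ipd):
    """Wrap an IPD into [-0.5, 0.5) cycles."""
    return (ipd + 0.5) % 1.0 - 0.5


def invert_one_ear(ipd):
    """Polarity inversion in one ear adds half a cycle of interaural phase."""
    return wrap(ipd + 0.5)


pattern = tuning(invert_one_ear(0.0))  # -0.5 cycles, equivalent to +0.5

centre = pattern[len(BEST_IPDS) // 2]
edges = (pattern[0], pattern[-1])
# Concave pattern: the most lateral labels are most active, the midline least.
print(centre, edges)
print(BEST_IPDS[np.argmax(pattern)])  # argmax returns the first tied edge
```

A verbatim maximal read-out of this pattern points to a far-lateral label (here -0.5, because `np.argmax` returns the first of the two tied maxima), which is the situation the following paragraph weighs against listening experience.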

Under the maximal model, a concave activity pattern should presumably prompt listeners to report hearing two distinct images (or distinct edges) even more often than a convex pattern does. Listening to signals exemplary of such conditions, however, demonstrates the opposite. Auditory images certainly broaden when signal polarity is inverted, yet they are considerably less broad (more compact) than when different noises are played in the two ears, causing spatial cues to fluctuate. Inverting polarity also results in a less definitive splitting of a single auditory image into two or more distinct images (or edges).

Pros:

• Intuitive given selectivity for spatial cues in midbrain neurons.

• Intuitive because visually identified objects are typically described as having a single spatial location.

• Equal activities evoked along the medial and lateral slopes of spatially selective neurons do not give rise to spatial ambiguities.

Cons:

• Maximal activities encode only contralateral directions, leaving ipsilateral directions unencoded.

• Inconsistent with spatial hearing when spatial cues fluctuate or when signal polarity is inverted.